
Table of Contents

- – Understanding the Future of Online Retail: Adv...

- – Identifying the Ideal Solution Framework for I...

- – Understanding the Technical Needs for High-Tr...

- – The Role of Bespoke Aesthetics in Current Web...

- – Tracking Success: Important Measurements for...

- – Why Cross-Platform Design Impacts Engagement...

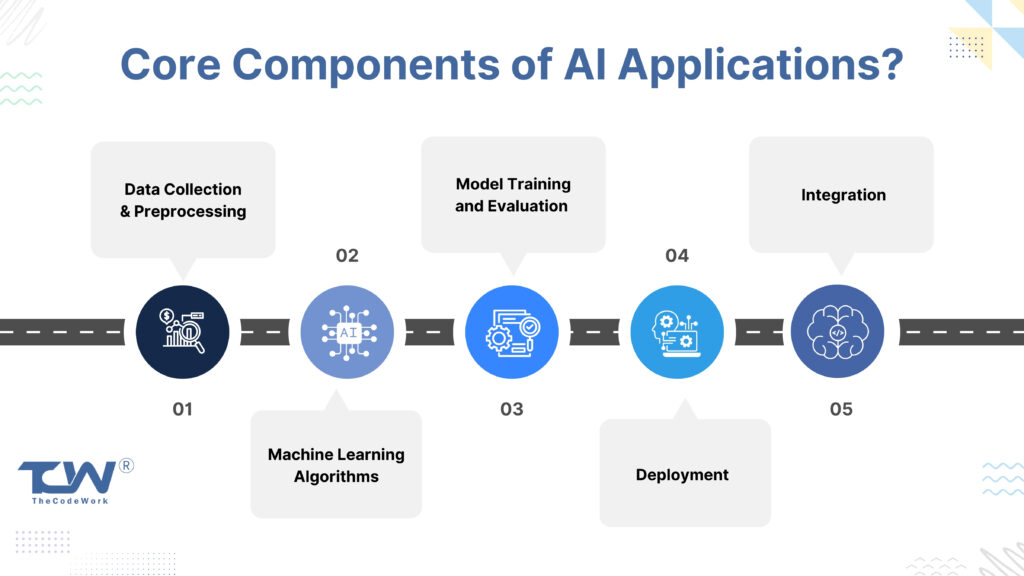

Developing AI software is not a sprint. It is a marathon that requires research, evaluation, and trial and error to define the role of AI in your organization and ensure secure, ethical, and ROI-driven solution deployment. This guide covers the key considerations, challenges, and stages of the AI project cycle.

![How to Develop AI Software [Step-by-Step Guide]](https://ddi-dev.com/uploads/ai-development-best-practices.png)

Your first goal is to define the role of AI in your operations. The easiest way to approach this is to work backwards from your objective(s): what do you want to achieve with AI implementation? Think in terms of specific problems and measurable outcomes. Half of AI-mature companies rely on a combination of technical and business metrics to assess the ROI of applied AI use cases.

Understanding the Future of Online Retail: Advances alongside Outlooks

Choose use cases where you've already seen a convincing demonstration of the technology's potential. In the finance sector, AI has proved its value for fraud detection. Machine learning and deep learning models outperform traditional rules-based fraud detection systems by delivering a lower rate of false positives and showing better results in recognizing new types of fraud.

Researchers agree that synthetic datasets can improve privacy and representation in AI, particularly in sensitive industries like healthcare or finance. Gartner predicts that by 2024, as much as 60% of data for AI will be synthetic. All the acquired training data will then need to be pre-cleaned and cataloged. Use a consistent taxonomy to establish clear data lineage, and then monitor how different users and systems use the supplied data.

Identifying the Ideal Solution Framework for Individual Organization Requirements

Additionally, you'll have to split the available data into training, validation, and test datasets to benchmark the developed model. Mature AI development teams complete most of the data management processes with data pipelines: an automated sequence of steps for data ingestion, processing, storage, and subsequent access by AI models.

Example of data pipeline architecture for data warehousing

With a robust data pipeline architecture, companies can process very large numbers of data records within milliseconds, in near real time.
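The split into training, validation, and test datasets can be sketched in a few lines. This is a minimal illustration rather than a production pipeline: the function names `split_dataset` and `run_pipeline`, the 70/15/15 ratio, and the sample records are all assumptions made for the example.

```python
import random


def split_dataset(records, train=0.7, validation=0.15, seed=42):
    """Shuffle records and split them into train/validation/test lists."""
    if not 0 < train < 1 or not 0 < validation < 1 or train + validation >= 1:
        raise ValueError("train and validation must be fractions summing to less than 1")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


def run_pipeline(raw_rows):
    """Hypothetical pipeline: ingest, clean, then split."""
    ingested = [row.strip() for row in raw_rows]   # ingestion: normalize whitespace
    cleaned = [row for row in ingested if row]     # processing: drop empty rows
    return split_dataset(cleaned)


# Illustrative input: 100 valid records plus two empty rows that get dropped.
raw = [f"record-{i}" for i in range(100)] + ["", "  "]
train_set, val_set, test_set = run_pipeline(raw)
print(len(train_set), len(val_set), len(test_set))
```

Keeping the test set untouched until the final benchmark is the point of the three-way split: the validation set absorbs the tuning decisions, so the test score stays an honest estimate.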

Amazon's Supply Chain Finance Analytics team, for example, optimized its data engineering workloads with Dremio. With the current setup, the company sets up new extract, transform, load (ETL) workloads 90% faster, while query speed increased 10X. This, in turn, made data more accessible for thousands of concurrent users and machine learning jobs.

Understanding the Technical Needs for High-Traffic Shopping Websites

The training process is complex, too, and prone to problems like sample efficiency, stability of training, and catastrophic interference, among others. Successful business applications are still few and mostly come from deep tech companies. Foundation models are the backbone of generative AI. By using a pre-trained, fine-tuned model, you can quickly train a new gen AI algorithm.

Unlike traditional ML models for natural language processing, foundation models require smaller labeled datasets because they already have knowledge embedded during pre-training. That said, foundation models can still produce inaccurate and inconsistent results, especially when applied to domains or tasks that differ from their training data. Training a foundation model from scratch also requires substantial computational resources.

The Role of Bespoke Aesthetics in Current Web Development

Effectively, the model does not produce the desired results in the target environment because of differences in parameters or settings. For example, if the model dynamically optimizes prices based on the total number of orders and conversion rates, but these parameters change significantly over time, it will no longer give accurate suggestions.
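One simple way to notice that an input such as the conversion rate has moved away from what the model saw during training is to compare a recent sample against the training sample. The sketch below is an assumption-heavy illustration: `drift_score`, `has_drifted`, the threshold of 2.0, and all the numbers are made up for the example, and real monitoring setups often use richer statistics.

```python
from statistics import mean, stdev


def drift_score(reference, current):
    """Shift of the current mean, measured in reference standard deviations."""
    ref_std = stdev(reference)
    if ref_std == 0:
        raise ValueError("reference sample has no variance")
    return abs(mean(current) - mean(reference)) / ref_std


def has_drifted(reference, current, threshold=2.0):
    """Flag drift when the mean moved more than `threshold` standard deviations."""
    return drift_score(reference, current) > threshold


# Illustrative daily conversion rates (%), not real data.
training_conversion = [2.1, 2.3, 1.9, 2.0, 2.2, 2.4, 2.1]
stable_week = [2.0, 2.2, 2.1, 2.3, 2.0, 2.2, 2.1]
shifted_week = [3.4, 3.6, 3.1, 3.5, 3.3, 3.7, 3.2]

print(has_drifted(training_conversion, stable_week))
print(has_drifted(training_conversion, shifted_week))
```

A flag from a check like this is a prompt to retrain or recalibrate, not proof that the model's suggestions are already wrong.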

Instead, most keep a database of model versions and perform iterative model training to progressively improve the quality of the final product. [...] and only 11% are successfully deployed to production.

You benchmark the iterations to identify the model version with the highest accuracy. A model with too few features struggles to adapt to variations in the data, while too many features can lead to overfitting and worse generalization.
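Benchmarking model versions against each other can be as simple as recording each version's validation accuracy and picking the maximum. The sketch below assumes a hypothetical in-memory registry; `ModelVersion`, `best_version`, and the accuracy figures are illustrative, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    name: str
    n_features: int
    validation_accuracy: float


def best_version(versions):
    """Return the version with the highest validation accuracy."""
    if not versions:
        raise ValueError("no model versions to compare")
    return max(versions, key=lambda v: v.validation_accuracy)


# Illustrative registry: too few features underfit, too many overfit.
registry = [
    ModelVersion("v1", n_features=3, validation_accuracy=0.71),
    ModelVersion("v2", n_features=12, validation_accuracy=0.86),
    ModelVersion("v3", n_features=60, validation_accuracy=0.79),
]

winner = best_version(registry)
print(winner.name, winner.validation_accuracy)
```

Comparing on held-out validation data rather than training data is what lets the overfitted version show up as the weaker candidate.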

Tracking Success: Important Measurements for Platform Initiatives Projects

But it's also the most error-prone one. Only 32% of ML projects, including refreshing models for existing deployments, typically reach deployment.

Deployment success across different machine learning projects

The reasons for failed deployments range from a lack of executive support for the project due to unclear ROI to technical difficulties with ensuring stable model operations under increased load.

The team needed to ensure that the ML model was highly available and served highly personalized recommendations from the titles available on the user's device, and that it did so for the platform's many users. To ensure high performance, the team decided to schedule model scoring offline and then serve the results once the user logs into their device.
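The offline-scoring pattern described here separates an expensive batch step from a cheap lookup at login time. The following sketch shows the idea only; the `score` function is a stand-in for a real model, and the user profiles, catalog, and function names are hypothetical.

```python
def score(preferred_genres, title_genres):
    """Stand-in for a real model: count genres shared by user and title."""
    return len(preferred_genres & title_genres)


def precompute_recommendations(users, catalog, top_n=2):
    """Batch job: score every title for every user and keep the top results."""
    cache = {}
    for user_id, preferred in users.items():
        # Sort by descending score, then by title so ties are deterministic.
        ranked = sorted(catalog, key=lambda t: (-score(preferred, catalog[t]), t))
        cache[user_id] = ranked[:top_n]
    return cache


def serve(cache, user_id):
    """Login-time path: a dictionary lookup, no model call."""
    return cache.get(user_id, [])


# Illustrative data only.
users = {"u1": {"drama", "crime"}, "u2": {"comedy"}}
catalog = {
    "Title A": {"drama"},
    "Title B": {"comedy", "drama"},
    "Title C": {"crime", "drama"},
}

cache = precompute_recommendations(users, catalog)
print(serve(cache, "u1"))
print(serve(cache, "unknown-user"))
```

The trade-off is freshness: recommendations are only as current as the last batch run, which is acceptable when preferences change slowly relative to the scoring schedule.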

Why Cross-Platform Design Impacts Engagement Rates

It also helped the company optimize cloud infrastructure costs. Ultimately, successful AI model deployments come down to having effective processes. Just as the DevOps principles of continuous integration (CI) and continuous delivery (CD) improve the release of regular software, MLOps increases the speed, efficiency, and predictability of AI model deployments. MLOps is a set of practices and tools that AI development teams use to create a sequential, automated pipeline for releasing new AI solutions.
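The "sequential, automated pipeline" idea can be illustrated with a runner that executes stages in order and stops at the first failed gate. This is a toy sketch, not an MLOps tool: the stage names, the stubbed accuracy value, and the 0.8 threshold are all assumptions for the example.

```python
def run_stages(stages, context):
    """Run stages in order; stop and report the first one that fails."""
    completed = []
    for name, step in stages:
        if not step(context):
            return completed, name
        completed.append(name)
    return completed, None


def validate_data(ctx):
    return len(ctx["rows"]) > 0


def train(ctx):
    ctx["accuracy"] = 0.9  # stubbed result; a real stage would fit a model here
    return True


def evaluate(ctx):
    return ctx["accuracy"] >= ctx["min_accuracy"]  # quality gate before release


def deploy(ctx):
    ctx["deployed"] = True
    return True


STAGES = [
    ("validate_data", validate_data),
    ("train", train),
    ("evaluate", evaluate),
    ("deploy", deploy),
]

ok_context = {"rows": [1, 2, 3], "min_accuracy": 0.8}
completed, failed = run_stages(STAGES, ok_context)
print(completed, failed)

strict_context = {"rows": [1, 2, 3], "min_accuracy": 0.95}
strict_completed, strict_failed = run_stages(STAGES, strict_context)
print(strict_completed, strict_failed)
```

Placing the evaluation gate before deployment mirrors how CI blocks a release on failing tests: a model that misses the bar never reaches the deploy stage.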
